Shahrukh Khan

LLM Tokenizations: Understanding Text Tokenizations for Transformer-based Language Models

25 December, 2023

- Introduction

- Tokenization Pipelines in Practice

- Word Tokenization

- Character-level Tokenization

- Subword Tokenization

- Summary

Introduction

As it is established practice that Computers represent the data digitally in the binary format. Similarly, Large Language Models (LLMs) do not directly process the text provided by the users in the shape of a prompt. The user prompts are passed through a multi-stage pre-processing pipeline prior to being fed to a LLM. Furthermore, you may have came across the “tokens” while navigating the pricing pages of the popular commercial LLM service providers.

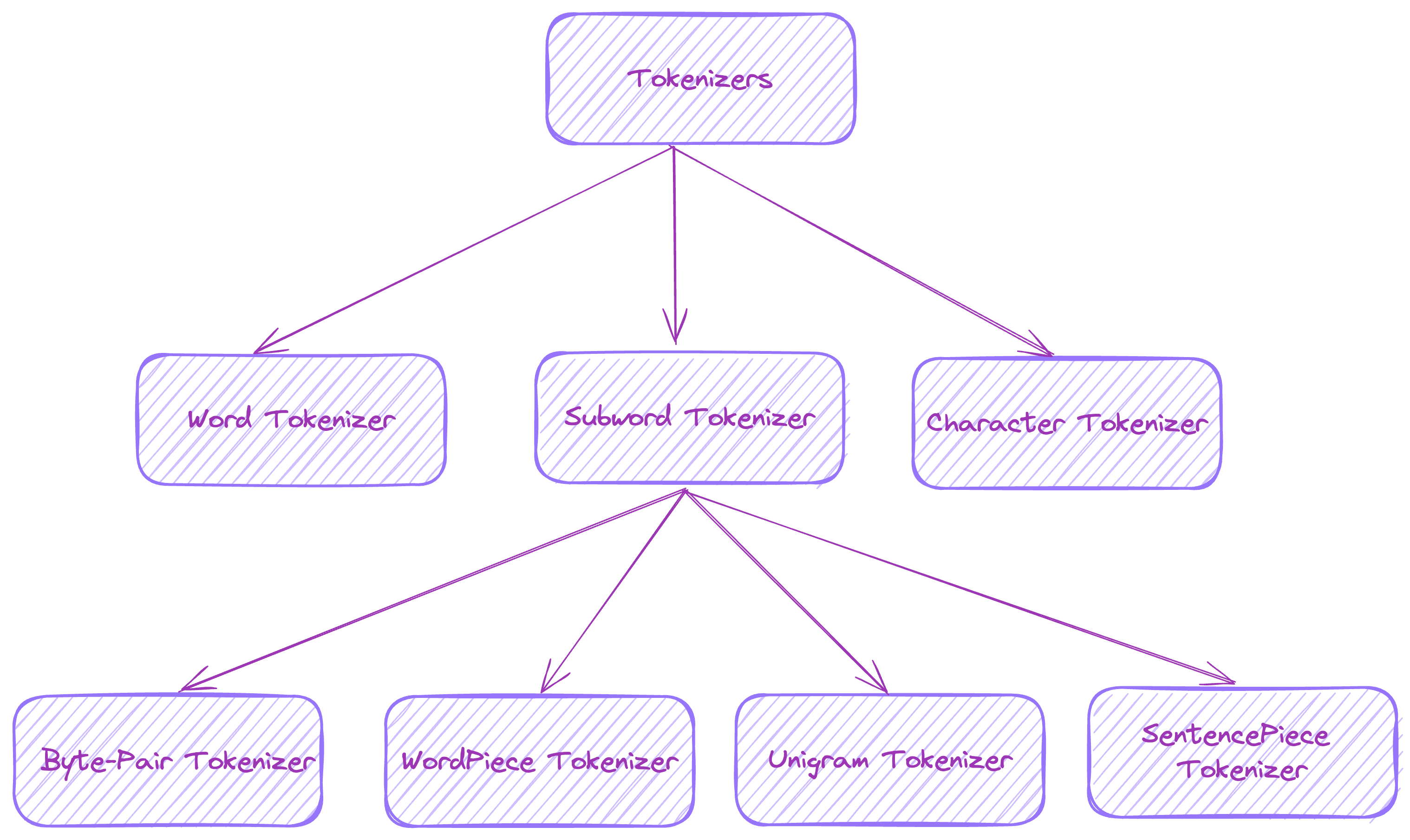

In this post, we will expolore various tokenization mechanisms. Including the ones used by the main stream LLMs including LLAMA, GPT, BERT etc. Alongside the simulated tokenization dry runs, we will also go over the implementation of the such tokenization algorithms alongside. We can begin by looking the tokenizers tree, which is composed of most prominent tokenization algorithms.

💡 It is pertinent to note that tokenization process directly influences the items in a vocabulary (formally called word types)of a language model alongside the size of the vocabulary. Additionally, each word type from the vocabulary corresponds to its counterpart word embedding latent vectors in the embedding matrix. Concretely, the size of vocabulary is directly proportional to number of embedding vectors in the embedding matrix of a language model. Thereby, you may also prune the vocabulary to discard the infrequent word types post-tokenization to reduce the size of the embedding matrix (which contains trainable weights).

Tokenization Pipelines in Practice

Before we delve into aforementioned tokenization mechanism. It is important to highlight that tokenizers in practice are not used stand-alone on raw input. Rather specialized pre-processing and post-processing steps are performed to standardize tokenization results. This becomes even more critical when processing multi-lingual corpora.

Normalization

The normalization process includes basic tidying tasks like eliminating unnecessary spaces, converting to lowercase, and/or eliminating accents. If you’re acquainted with Unicode normalization (like NFC or NFKC), the tokenizer might also implement this step. Below is a simple example of a normalizer from Huggingface tokenizers.

from transformers import AutoTokenizer

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

bert_normalizer = tokenizer.backend_tokenizer.normalizer

print(bert_normalizer.normalize_str("Héllò hôw are ü?"))

Output:

'hello how are u?'

Pre-tokenization

Training a tokenizer solely on raw text is not feasible. To address this, we use pre-tokenization to initially divide the text into smaller entities, such as words. As discussed later, a word-based tokenizer can simply split a raw text into words on whitespace and punctuation. The resulting words become the boundaries of the subtokens the tokenizer can learn during its training.

Pre-tokenization: BERT vs GPT vs T5

Below is a simple comparison taken from Huggingface NLP course.

from transformers import AutoTokenizer

# BERT pre_tokenize

tokenizer = AutoTokenizer.from_pretrained("bert-base-uncased")

tokenizer.backend_tokenizer.pre_tokenizer.pre_tokenize_str("Hello, how are you?")

# Output:

[('Hello', (0, 5)), (',', (5, 6)), ('how', (7, 10)), ('are', (11, 14)), ('you', (16, 19)), ('?', (19, 20))]

# GPT pre_tokenize

tokenizer = AutoTokenizer.from_pretrained("gpt2")

tokenizer.backend_tokenizer.pre_tokenizer.pre_tokenize_str("Hello, how are you?")

# Output:

[('Hello', (0, 5)), (',', (5, 6)), ('Ġhow', (6, 10)), ('Ġare', (10, 14)), ('Ġ', (14, 15)), ('Ġyou', (15, 19)),

('?', (19, 20))]

# T5 pre_tokenize

tokenizer = AutoTokenizer.from_pretrained("t5-small")

tokenizer.backend_tokenizer.pre_tokenizer.pre_tokenize_str("Hello, how are you?")

# Output:

[('▁Hello,', (0, 6)), ('▁how', (7, 10)), ('▁are', (11, 14)), ('▁you?', (16, 20))]

Key Highlights:

| Tokenizer | Splitting Mechanism | Whitespace inclusion |

|---|---|---|

| BERT | Whitespace and punctuation | Ignores |

| GPT | Whitespace and punctuation | Replaces with Ġ |

| T5 | Whitespace only | Replaces with _ and also prepends _ at the beginning of the sequence |

Word Tokenization

The most trivial form of tokenization is based on the splitting the text by space. For instance, you have the text Don't you love 🤗 Transformers? We sure do.. The word-level space-based tokenizer will split it into tokens ["Don't", "you", "love", "🤗", "Transformers?", "We", "sure", "do."]. If you notice closely, the space-based tokenizer does not split compound words such as Don't, however, Don't corresponds to do not. Hence, in practice NLP libraries like spacy and Moses implement word-level tokenizers as a combination of rule and space-based approaches. Thereby, these rule-based tokenizers will produce the following tokens for the same input text ["Do", "n't", "you", "love", "🤗", "Transformers", "?", "We", "sure", "do", "."].

Despite, the fact these tokenizers do a reasonable job at segregating such compound words. In general, word-level tokenizers result in large vocabularies given a large copora. For instance, Transformer XL uses space and punctuation tokenization and has a vocabulary size of 267,735! Consequently, as described above this results in a huge embedding matrix increasing the complexity and compute requirements for pre-training a language model. A rule of thumb for a monolingual transformer-based language model should have a vocabulary size approximately around 50,000.

Another downside of the word-level tokenizer is its inability to handle out-of-vocabulary words at test time. This means we don’t have a word embedding for that word and thus cannot process the input sequence. A typical solution entails, that all such occurrences of words are typically mapped to a special <UNK> token. Such mapping can potentially result in loss of useful information when processing a text sequence by the language model. Similarly, Word-level tokenization treats different forms of the same word (e.g., “open”, “opened”, “opens”, “opening”, etc) as separate types thus, resulting in separate embeddings for each.

We will use the following copus for all the tokenizer implementations:

corpus = [

"The sleek black cat gracefully leaps over the sleeping dog.",

"A nimble gray squirrel effortlessly vaults across the backyard fence.",

"In the quiet forest, a small rabbit dashes past the resting hare.",

"With a swift motion, the agile kangaroo hops over the dozing koala.",

"A fast and agile cheetah sprints across the savannah, leaving dust in its wake."

]

Whitespace Word-level tokenizer implementation

def whitespace_word_level_tokenizer(sequence: str) -> list[str]:

return sequence.split()

result = list(map(whitespace_word_level_tokenizer, corpus))

print(result)

Output:

[['The', 'sleek', 'black', 'cat', 'gracefully', 'leaps', 'over', 'the', 'sleeping', 'dog.'], ['A', 'nimble', 'gray', 'squirrel', 'effortlessly', 'vaults', 'across', 'the', 'backyard', 'fence.'], ['In', 'the', 'quiet', 'forest,', 'a', 'small', 'rabbit', 'dashes', 'past', 'the', 'resting', 'hare.'], ['With', 'a', 'swift', 'motion,', 'the', 'agile', 'kangaroo', 'hops', 'over', 'the', 'dozing', 'koala.'], ['A', 'fast', 'and', 'agile', 'cheetah', 'sprints', 'across', 'the', 'savannah,', 'leaving', 'dust', 'in', 'its', 'wake.']]

Rule-based Word-level tokenizer implementation

import re

def rule_based_word_level_tokenizer(sequence: str) -> list[str]:

"""The rules include to replace any special characters other than alphanumeric with a whitespace. Then only extract whitespace separated words."""

preprocessed_sequence = re.sub(r'\W+', ' ', sequence)

return re.findall(r'\b\w+\b|[^\w\s]', preprocessed_sequence)

result = list(map(rule_based_word_level_tokenizer, corpus))

print(result)

Output:

[['The', 'sleek', 'black', 'cat', 'gracefully', 'leaps', 'over', 'the', 'sleeping', 'dog'], ['A', 'nimble', 'gray', 'squirrel', 'effortlessly', 'vaults', 'across', 'the', 'backyard', 'fence'], ['In', 'the', 'quiet', 'forest', 'a', 'small', 'rabbit', 'dashes', 'past', 'the', 'resting', 'hare'], ['With', 'a', 'swift', 'motion', 'the', 'agile', 'kangaroo', 'hops', 'over', 'the', 'dozing', 'koala'], ['A', 'fast', 'and', 'agile', 'cheetah', 'sprints', 'across', 'the', 'savannah', 'leaving', 'dust', 'in', 'its', 'wake']]

Above were some toy examples of world-level tokenizers. Additional steps include creating a vocabulary consisting of set of tokens post-tokenization. Thereafter, language models learn a unique embedding per vocabulary item encampasulating the semantic meaning of the token. However, as discussed above word-level tokenization has inherent limitations including large resulting vocabularies, inability to deal with out-of-vocabulary tokens at test time etc.

Character-level Tokenization

Character-level tokenization alleviates the out-of-vocabulary problem of the word-level tokenization. It solves this by building the vocabulary based on all possible single characters possible for a given natural language. Hence, you can always form any word by putting together individual characters from the vocabulary even when you have misspellings in the input text. Additionally, the vocabulary size becomes much more smaller, for instance, for English language you can potentially have 170,000 unique words. Whereby, to represent the same words you would only require 256 characters. Hence, drastically reducing vocabulary size and computational complexity.

While character-based tokenization provides an elegant solution for gracefully handling out-of-vocabulary terms. Character-based tokenization present its own set of challenges such as characters don’t hold more contextual information than individual words. The resulting tokenized sequences are much longer than word-level tokenization due to the fact that each character in your input is a distinct token. Resultantly, the language model is required to deal with longer input contexts. Furthermore, to learn semantic representations at word-level you need to explicitly pool information for each word within the language model. Let’s have quick look at a naive character-level tokenizer implementation below.

Character-level tokenizer implementation

def character_level_tokenizer(sequence: str) -> list[str]:

return list(sequence)

result = list(map(character_level_tokenizer, corpus))

print(result)

Output:

[['T', 'h', 'e', ' ', 's', 'l', 'e', 'e', 'k', ' ', 'b', 'l', 'a', 'c', 'k', ' ', 'c', 'a', 't', ' ', 'g', 'r', 'a', 'c', 'e', 'f', 'u', 'l', 'l', 'y', ' ', 'l', 'e', 'a', 'p', 's', ' ', 'o', 'v', 'e', 'r', ' ', 't', 'h', 'e', ' ', 's', 'l', 'e', 'e', 'p', 'i', 'n', 'g', ' ', 'd', 'o', 'g', '.'], ['A', ' ', 'n', 'i', 'm', 'b', 'l', 'e', ' ', 'g', 'r', 'a', 'y', ' ', 's', 'q', 'u', 'i', 'r', 'r', 'e', 'l', ' ', 'e', 'f', 'f', 'o', 'r', 't', 'l', 'e', 's', 's', 'l', 'y', ' ', 'v', 'a', 'u', 'l', 't', 's', ' ', 'a', 'c', 'r', 'o', 's', 's', ' ', 't', 'h', 'e', ' ', 'b', 'a', 'c', 'k', 'y', 'a', 'r', 'd', ' ', 'f', 'e', 'n', 'c', 'e', '.'], ['I', 'n', ' ', 't', 'h', 'e', ' ', 'q', 'u', 'i', 'e', 't', ' ', 'f', 'o', 'r', 'e', 's', 't', ',', ' ', 'a', ' ', 's', 'm', 'a', 'l', 'l', ' ', 'r', 'a', 'b', 'b', 'i', 't', ' ', 'd', 'a', 's', 'h', 'e', 's', ' ', 'p', 'a', 's', 't', ' ', 't', 'h', 'e', ' ', 'r', 'e', 's', 't', 'i', 'n', 'g', ' ', 'h', 'a', 'r', 'e', '.'], ['W', 'i', 't', 'h', ' ', 'a', ' ', 's', 'w', 'i', 'f', 't', ' ', 'm', 'o', 't', 'i', 'o', 'n', ',', ' ', 't', 'h', 'e', ' ', 'a', 'g', 'i', 'l', 'e', ' ', 'k', 'a', 'n', 'g', 'a', 'r', 'o', 'o', ' ', 'h', 'o', 'p', 's', ' ', 'o', 'v', 'e', 'r', ' ', 't', 'h', 'e', ' ', 'd', 'o', 'z', 'i', 'n', 'g', ' ', 'k', 'o', 'a', 'l', 'a', '.'], ['A', ' ', 'f', 'a', 's', 't', ' ', 'a', 'n', 'd', ' ', 'a', 'g', 'i', 'l', 'e', ' ', 'c', 'h', 'e', 'e', 't', 'a', 'h', ' ', 's', 'p', 'r', 'i', 'n', 't', 's', ' ', 'a', 'c', 'r', 'o', 's', 's', ' ', 't', 'h', 'e', ' ', 's', 'a', 'v', 'a', 'n', 'n', 'a', 'h', ',', ' ', 'l', 'e', 'a', 'v', 'i', 'n', 'g', ' ', 'd', 'u', 's', 't', ' ', 'i', 'n', ' ', 'i', 't', 's', ' ', 'w', 'a', 'k', 'e', '.']]

Subword Tokenization

To get the best of both worlds, transformer-based language models leverage subword tokenization technique which combines both character-level and word-level tokenization mechanisms. Interestingly, the original idea was developed for machine translation by Sennrich et al., ACL 2016.

💡 "The main motivation behind this paper is that the translation of some words is transparent in that they are translatable by a competent translator even if they are novel to him or her, based on a translation of known subword units such as morphemes or phonemes."

The key principle of subword tokenization entails applying word-level tokenization to more frequent terms. Whereby, the rather rare terms are decomposed into smaller frequent terms. For instance, the word annoyingly can potentially be decomposed into two words annoying and ly. Hence, this keeps in check the input tokenized sequence length (not decomposing frequent terms), whilst also providing means to handle out-of-vocbulary terms (decomposition of rare terms).

Concretely, the subword toeknization has multiple implementation flavors. Below we will go over the most commonplace flavors used by some of the mainstream language models.

Byte-Pair (BPE) Tokenization

BPE is used by a lot of Transformer models, including GPT, GPT-2, RoBERTa, BART, and DeBERTa. BPE tokenization involves a training step at the beginning. Unlike the machine learning model the training process entails computing statistical measures to construct a meaningful and robust vocabulary whilst also applying pre-processing (normalization and pre-tokenization) and post-processing steps. For instance, for the toy corpus below the base vocabulary will consist of {“b”, “g”, “h”, “n”, “p”, “s”, “u”}.

"hug", "pug", "pun", "bun", "hugs"

For real-world cases, the base vocabulary will include all Unicode characters at the beginning. In that case, if the tokenizer encounters a character that was not a member of training corpus, it will be mapped to unknown token. This is the reason behind why most NLP models struggle with anlyzing sequences with emojis present.

💡 The GPT-2 and Roberta tokenizers directly use byte-level representations of all characters as the base vocbulary with size 256. Thereby, this initial trick encompasses all possible character and allows to avoid out-of-vocabulary situation. This trick is called byte-level BPE.

Step 0:

After forming the base vocabulary, BPE tokenizer looks at each pair of consecutive tokens and concatenates the most frequent token-pair token and adds the concatenated token-pair to base vocabulary and replaces all the consecutive occurences of both tokens with their concatenated version. Here, + indicates the token-boundary based on the current state of vocabulary. Since, at the beginning we only have characters in the base vocabulary thus, here’s how things would look like at the beginning.

| Word | Frequency |

|---|---|

| h+u+g | 10 |

| p+u+g | 5 |

| p+u+n | 12 |

| b+u+n | 4 |

| h+u+g+s | 5 |

Vocbulary: {"b", "g", "h", "n", "p", "s", "u"}

Step 1:

After the first pass we can observe the token-pair u+g occurs 20 times in the entire corpus, hence, we concatenate and replace in corpus, add to the vocabulary.

| Word | Frequency |

|---|---|

| h+ug | 10 |

| p+ug | 5 |

| p+u+n | 12 |

| b+u+n | 4 |

| h+ug+s | 5 |

Vocbulary: {"b", "g", "h", "n", "p", "s", "u", "ug"}

Step 2:

In this iteration we find that u+n has the highest frequecy of 16.

| Word | Frequency |

|---|---|

| h+ug | 10 |

| p+ug | 5 |

| p+un | 12 |

| b+un | 4 |

| h+ug+s | 5 |

Vocbulary: {"b", "g", "h", "n", "p", "s", "u", "ug", "un"}

Step 3:

In this iteration we find that h+ug has the highest frequecy of 15.

| Word | Frequency |

|---|---|

| hug | 10 |

| p+ug | 5 |

| p+un | 12 |

| b+un | 4 |

| hug+s | 5 |

Vocbulary: {"b", "g", "h", "n", "p", "s", "u", "ug", "un", "hug"}

The above process is repeated again until a certain criteria (desired vocabulary size is reached) is met or till fixed number of steps.

WordPiece Toekenization

WordPiece has also been reused in quite a few Transformer models based on BERT, such as DistilBERT, MobileBERT, Funnel Transformers, and MPNET. WordPiece tokenizer is quite similar to the BPE tokenizer while having two distinctive differences:

- Intermediate characters in have the prepended prefix

##. - Merge of each token-pair is based on greater likelihood a given token-pair is likely to appear in a sequence in the corpus. In other words the algorithm prioritizes the merging of a token-pair where the individual parts are less frequent in the vocabulary. More formally given by for

itoken-pair with individualjandkas tokens:

Step 0:

Hence, as per the above distictions the previously mentioned corpus and the base vocubalary will look as follows at the beginning of the tokenizer training process.

| Word | Frequency |

|---|---|

| h+##u+##g | 10 |

| p+##u+##g | 5 |

| p+##u+##n | 12 |

| b+##u+##n | 4 |

| h+##u+##g+##s | 5 |

Vocbulary: {"b", "h", "p", "##g", "##n", "##s", "##u"}

Step 1:

The highest score after the first iteration will go to the token-pair ##g+##s with the highest score being 1/20. Whereby, all other token-pairs have ##u in them which deflates the scores of all other token-pairs by the factor 1/36.

| Word | Frequency |

|---|---|

| h+##u+##g | 10 |

| p+##u+##g | 5 |

| p+##u+##n | 12 |

| b+##u+##n | 4 |

| h+##u+##gs | 5 |

Vocbulary: {"b", "h", "p", "##g", "##n", "##s", "##u", "##gs"}

Step 2:

At this point all the token-pairs have the identical scores because all of them include ##u as one part of the pair. Let’s say we merge h+##u first.

| Word | Frequency |

|---|---|

| hu+##g | 10 |

| p+##u+##g | 5 |

| p+##u+##n | 12 |

| b+##u+##n | 4 |

| hu+##gs | 5 |

Vocbulary: {"b", "h", "p", "##g", "##n", "##s", "##u", "##gs", "hu"}

Step 3:

After this iteration we observe the maximum score for the token pair hu+##gs with score being 1/15.

| Word | Frequency |

|---|---|

| hu+##g | 10 |

| p+##u+##g | 5 |

| p+##u+##n | 12 |

| b+##u+##n | 4 |

| hugs | 5 |

Vocbulary: {"b", "h", "p", "##g", "##n", "##s", "##u", "##gs", "hu", "hugs"}

Similarly to the BPE tokenization, above process is repeated again until a certain criteria (desired vocabulary size is reached) is met or till fixed number of steps.

Unigram Tokenization

The Unigram algorithm is often used in SentencePiece, which is the tokenization algorithm used by models like AlBERT, T5, mBART, Big Bird, and XLNet. However, unlike BPE and WordPiece tokenizers which build the vocabulary from bottom-up the Unigram tokenization algorithm starts with large base vocabulary and prunes it until the point where desired vocabulary size is reached.

💡 Unigram is not used directly for any of the models in the transformers, but it’s used in conjunction with SentencePiece.

To determine word types which are eligible to be removed from the vocabulary the Unigram algorithm computes a loss over the entire corpus given the vocabulary at current step. Then for each word type in the vocabulary it determines the word types which increase the loss the least if they are removed from the vocabulary. Since, they have less influence on the overall loss over the corpus hence they are deemed to be less important. Then at the end of each step Unigram removes p (with p usually being 10% or 20%) percent of the word types which had the lowest influence on the loss. Additionally, the base vocabulary can be built using either with all possible substring combinations for each word in the corpus or using another tokenization algorithm like BPE.

The loss for Unigram tokenizer is defined as follows, here N corresponds to the number of unique words in the corpus, \(S(x_i)\) refers to all possible tokenizations of word \(x_i\)

Training the Unigram entails training a Unigram language model which is a probablistic language model which always generates the most frequent token over and over again if used for text generation with greedy decoding. Also, the probability of a word at a given position is independent of the previous context and is just the probability of that word. That probability can simply be computed by the following position for a token at ith position, where V is the size of the vocabulary:

\[p_i = \frac{count(token_i)}{\sum_{j=1}^{V} count(token_j)}\]Now for instance in the case of our infamous toy corpus which used in other tokenization algorithms the denominotor of the above formula will always be 210 as that is the sum of all word frequencies. Whereby, frequencies for each word type in the vocabulary which is strictly composed of all substrings are given below: <table class=“tg”>

</table>

As an example let’s take a look at how we would compute the probability scores for the word “pug”.

\(P(["p", "u", "g"]) = \frac{17}{210} \times \frac{36}{210} \times \frac{20}{210} = 0.000389\)

\(P(["pu", "g"]) = \frac{17}{210} \times \frac{20}{210} = 0.0022676\)

\(P(["p", "ug"]) = \frac{17}{210} \times \frac{20}{210} = 0.0022676\)

The rule of thumb here is that tokenization with least number of tokens will result in higher probability score than the one with more tokens. In case of a tie we keep the tokenization which we encounter first (note that in a larger corpus, equality cases like this will be rare).

Excursion: Optimal Implementation for Unigram Loss

For a large corpus it’d be computationally expensive to determine all possible tokenization when computing the loss for the Unigram algorithm. Here, we can leverage Viterbi algorithm (a nice youtube tutorial explaining the concept) which builds a graph to detect the most feasible tokenization at position and discard all other tokenization reaching until the given position.

Let’s take a look at an example using our vocabulary and the word “unhug”. For each position, the subwords with the best scores ending there are the following:

- Character 0 (u): “u” (score 0.171429)

- Character 1 (n): “un” (score 0.076191)

- Character 2 (h): “un” “h” (score 0.005442)

- Character 3 (u): “un” “hu” (score 0.005442)

- Character 4 (g): “un” “hug” (score 0.005442)

Back to training Unigram Tokenizer

Once the tokenization is finished for our original corpus we will get a score for each word which can be plugged into the loss function, as a reminder below is our corpus:

| Word | Frequency |

|---|---|

| hug | 10 |

| pug | 5 |

| pun | 12 |

| bun | 4 |

| hugs | 5 |

The tokenization of each word with their respective scores is:

"hug": ["hug"] (score 0.071428)

"pug": ["pu", "g"] (score 0.007710)

"pun": ["pu", "n"] (score 0.006168)

"bun": ["bu", "n"] (score 0.001451)

"hugs": ["hug", "s"] (score 0.001701)

So the loss is:

10 * (-log(0.071428)) + 5 * (-log(0.007710)) + 12 * (-log(0.006168)) + 4 * (-log(0.001451)) + 5 * (-log(0.001701)) = 169.8

Now which tokens shall we remove without increasing the loss. We saw above that for word “pug” both segmentations pu+g and p+ug yielded equivalent scores. Hence, we can safely remove from the above segmentations the term pu since for pun we also do have the token un hence, the overall loss won’t affected over the given corpus. Whereby, if we drop the token hug, then this would tokenize the word “hug” and “hugs” as follows:

"hug": ["hu", "g"] (score 0.006802)

"hugs": ["hu", "gs"] (score 0.001701)

Whereby the overall loss over the corpus would increase by:

- 10 * (-log(0.071428)) + 10 * (-log(0.006802)) = 23.5

Hence, removing hug word type from the vocabulary is won’t be a great choice wrt the Unigram loss.

SentencePiece Tokenization

Langauge models using SentencePiece are ALBERT, XLNet, Marian, and T5. The issue with above described tokenization algorithms lies in the assumption that input text is delimited by spaces, which doesn’t hold true for all languages. A potential remedy involves employing language-specific pre-tokenizers, such as XLM’s pre-tokenizer for Chinese, Japanese, and Thai. For a more comprehensive solution, SentencePiece (Kudo et al., 2018) offers a language-independent approach. It treats input as a raw stream, encompassing spaces within the vocbulary set, and utilizes the BPE or unigram algorithm to construct an appropriate vocabulary.

💡 All transformers models in the library that use SentencePiece use it in combination with unigram.